Listcrawling, a specialized form of web scraping, targets lists on websites to extract valuable data. The technique focuses on structured data within ordered, unordered, or definition lists, which makes it more efficient than general-purpose scraping for this kind of content. Understanding listcrawling means mastering techniques for identifying and extracting this data while navigating the ethical and legal considerations that responsible data acquisition requires.

From e-commerce price comparisons to academic research, listcrawling has diverse applications. This article covers its methods, challenges, and future trends, providing a comprehensive guide for both novice and experienced web scraping practitioners.

List Crawling: A Deep Dive into Web Data Extraction

List crawling, a specialized form of web scraping, focuses on efficiently extracting data organized in lists from websites. This technique is crucial for various applications, from price comparison engines to academic research projects. This article will explore the definition, techniques, ethical considerations, applications, challenges, and future trends of list crawling.

Definition and Scope of List Crawling

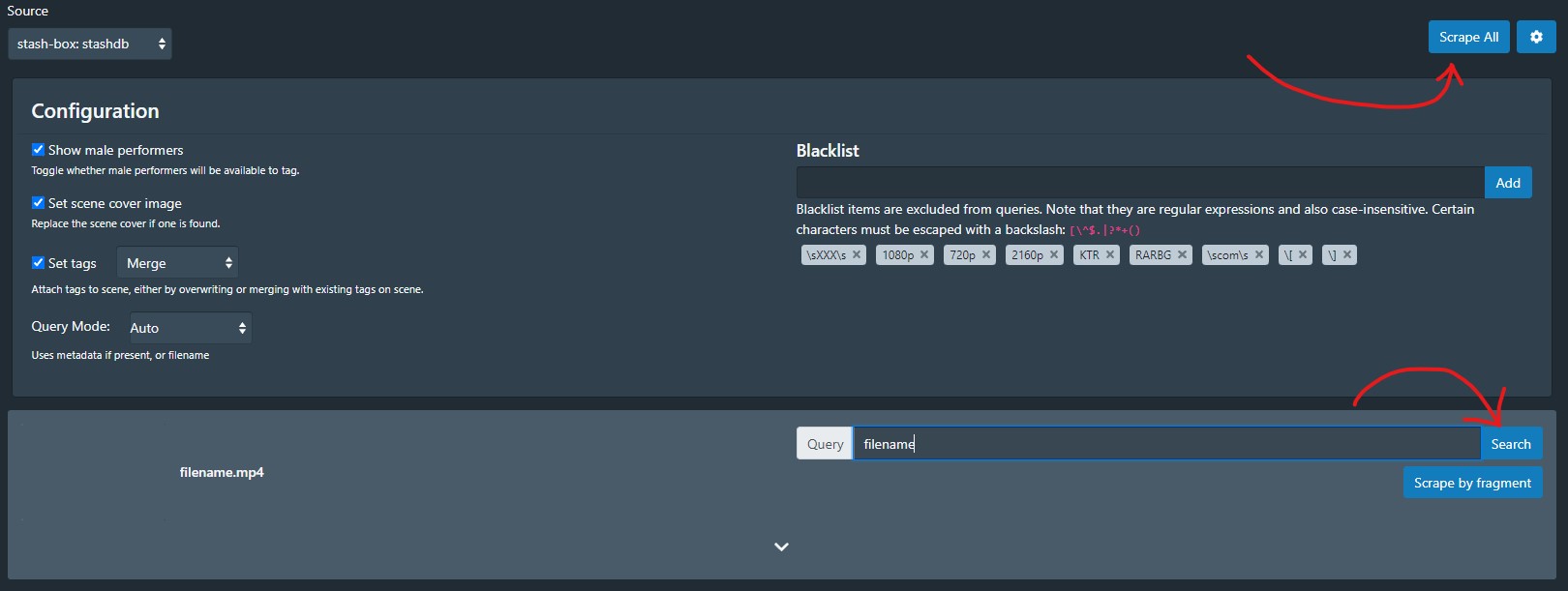

Source: github.io

Listcrawling techniques are constantly evolving, adapting to changes in website structures and data formats, so keeping up with those changes is crucial for an effective listcrawling strategy.

List crawling is the process of systematically extracting data from lists presented on websites. These lists can take various forms, including ordered lists (`<ol>`), unordered lists (`<ul>`), and definition lists (`<dl>`). Unlike general web scraping, which might target diverse data structures, list crawling concentrates on extracting list items, often aiming for specific data points within each item. This targeted approach allows for efficient data collection and processing.

Whatever the list type, a few ground rules apply to any crawl:

- Always check the website’s robots.txt file.

- Respect the website’s terms of service.

- Implement delays between requests to avoid overloading the server.

- Use rotating proxies to distribute the load.

- Anonymize collected data to protect user privacy.

- Obtain explicit permission when necessary.

List crawling is distinguished from general web scraping by its focus. General web scraping might extract all kinds of data from a page, while list crawling is specifically designed to handle lists. The specialization pays off most with large datasets: because every item in a list shares the same markup, one extraction rule can be applied to each item in turn, and often across every page built from the same template.
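The difference is easy to see in code. Below is a minimal sketch using only Python's standard-library `html.parser` (Beautiful Soup or Scrapy would make it shorter): instead of extracting everything on the page, it keeps only the text of `<li>`, `<dt>`, and `<dd>` elements, grouped by the kind of list that contains them. The class name `ListItemCollector` and the sample HTML are hypothetical, and the sketch assumes flat (non-nested) lists whose items have explicit closing tags.

```python
from html.parser import HTMLParser


class ListItemCollector(HTMLParser):
    """Collects the text of list items, keyed by the enclosing list type."""

    LIST_TAGS = {"ol", "ul", "dl"}
    ITEM_TAGS = {"li", "dt", "dd"}

    def __init__(self):
        super().__init__()
        self.items = {"ol": [], "ul": [], "dl": []}
        self._list_stack = []   # open list containers, innermost last
        self._item_tag = None   # item tag currently being read, if any
        self._buffer = []       # text fragments of the current item

    def handle_starttag(self, tag, attrs):
        if tag in self.LIST_TAGS:
            self._list_stack.append(tag)
        elif tag in self.ITEM_TAGS and self._list_stack:
            self._item_tag = tag
            self._buffer = []

    def handle_endtag(self, tag):
        if tag in self.ITEM_TAGS and self._item_tag == tag:
            # Collapse runs of whitespace inside the item's text.
            text = " ".join("".join(self._buffer).split())
            self.items[self._list_stack[-1]].append(text)
            self._item_tag = None
        elif tag in self.LIST_TAGS and self._list_stack:
            self._list_stack.pop()

    def handle_data(self, data):
        # Text outside list items (headings, paragraphs) is ignored.
        if self._item_tag is not None:
            self._buffer.append(data)


html = """
<h1>Store</h1>
<p>Not part of any list.</p>
<ul><li>Widget</li><li>Gadget</li></ul>
<ol><li>First headline</li><li>Second headline</li></ol>
<dl><dt>HTML</dt><dd>HyperText Markup Language</dd></dl>
"""
collector = ListItemCollector()
collector.feed(html)
print(collector.items)
```

The heading and paragraph never reach `items`; only list content is kept, which is the whole point of the specialization.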

Examples of websites whose content is frequently targeted by list crawlers include e-commerce platforms (product listings), news aggregators (article summaries), and job boards (job postings).

| Website Example | List Type | Data Extracted | Potential Applications |
|---|---|---|---|
| Amazon.com | Unordered list (`<ul>`) | Product names, prices, ratings | Price comparison, market research |
| Google News | Ordered list (`<ol>`) | Headline, source, publication date | News aggregation, sentiment analysis |
| Indeed.com | Unordered list (`<ul>`) | Job title, company, location | Job search, recruitment analysis |
| Wikipedia | Definition list (`<dl>`) | Term, definition | Knowledge base creation, data mining |

Techniques and Methods Used in List Crawling

Several libraries and tools facilitate list crawling. Popular choices include Beautiful Soup (Python), Scrapy (Python), and Cheerio (Node.js). These tools provide functionalities for parsing HTML and extracting data efficiently.

Regular expressions are frequently employed to identify and extract specific data points from list items. For instance, a regular expression can be used to isolate prices from product descriptions within an unordered list.
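As a sketch of the price example, the pattern below assumes prices written in US-dollar notation such as `$24.99` or `$1,299.00`; the function name `extract_price` and the sample descriptions are hypothetical, and other currencies or locale formats would need a different pattern. Prices are returned as `Decimal` to avoid floating-point rounding.

```python
import re
from decimal import Decimal

# A dollar sign, then either a number with thousands separators
# ("1,299") or a plain run of digits ("1299"), then optional cents.
PRICE_RE = re.compile(r"\$(\d{1,3}(?:,\d{3})+|\d+)(?:\.(\d{2}))?")


def extract_price(description):
    """Return the first dollar price in `description` as a Decimal, or None."""
    match = PRICE_RE.search(description)
    if match is None:
        return None
    dollars = match.group(1).replace(",", "")
    cents = match.group(2) or "00"
    return Decimal(f"{dollars}.{cents}")


items = [
    "Wireless mouse - now only $24.99!",
    "4K monitor, was $1,299.00, free shipping",
    "USB cable (price on request)",
]
for item in items:
    print(extract_price(item))
```

Requiring at least one comma group in the first alternative matters: with `*` instead of `+`, a plain `$1299.99` would match as `$129` and lose the rest of the number.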

Handling nested lists and complex list structures requires careful consideration. Recursive algorithms or iterative approaches can be used to navigate these structures and extract the desired data. A well-structured approach is essential to prevent errors and ensure data integrity.
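One way to handle nesting is a small recursive function. This sketch assumes the list markup is well-formed enough for Python's standard-library `xml.etree.ElementTree` to parse; real-world HTML often is not, and would typically go through a lenient parser such as Beautiful Soup first. The names `parse_list` and `flatten` are hypothetical. Each `<li>` becomes a dictionary holding its own text and its nested children, so the original hierarchy survives extraction.

```python
import xml.etree.ElementTree as ET


def parse_list(list_element):
    """Recursively convert a <ul>/<ol> element into nested dictionaries."""
    result = []
    for li in list_element.findall("li"):   # direct <li> children only
        parts = [li.text or ""]
        children = []
        for child in li:
            if child.tag in ("ul", "ol"):
                children.extend(parse_list(child))   # recurse into sub-list
            else:
                parts.append("".join(child.itertext()))  # e.g. <a>, <b>
            parts.append(child.tail or "")
        text = " ".join("".join(parts).split())
        result.append({"text": text, "children": children})
    return result


def flatten(nodes, path=()):
    """Yield each item as a 'parent > child' path string, depth first."""
    for node in nodes:
        current = path + (node["text"],)
        yield " > ".join(current)
        yield from flatten(node["children"], current)


html = """
<ul>
  <li>Electronics
    <ul>
      <li>Phones</li>
      <li>Laptops
        <ol><li>Ultrabooks</li></ol>
      </li>
    </ul>
  </li>
  <li>Books</li>
</ul>
"""
tree = parse_list(ET.fromstring(html.strip()))
for line in flatten(tree):
    print(line)
```

Keeping the nested structure first and flattening afterwards separates traversal from output format, so the same tree can feed either a hierarchical export or a flat table.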

A simple algorithm for extracting data from a list containing hyperlinks might involve iterating through each list item, extracting the hyperlink’s href attribute, and potentially the link text. This data can then be stored and processed further.
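A minimal sketch of that algorithm using the standard-library `html.parser`. It also resolves relative `href` values against the page URL with `urllib.parse.urljoin`, since links in lists are often relative. The class name `ListLinkExtractor`, the base URL, and the sample markup are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class ListLinkExtractor(HTMLParser):
    """Collects (absolute_url, link_text) pairs for <a> tags inside <li> items."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []
        self._li_depth = 0          # > 0 while inside at least one <li>
        self._current_href = None   # absolute URL of the open <a>, if any
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "li":
            self._li_depth += 1
        elif tag == "a" and self._li_depth > 0:
            href = dict(attrs).get("href")
            if href:
                self._current_href = urljoin(self.base_url, href)
                self._text = []

    def handle_endtag(self, tag):
        if tag == "li" and self._li_depth > 0:
            self._li_depth -= 1
        elif tag == "a" and self._current_href is not None:
            text = " ".join("".join(self._text).split())
            self.links.append((self._current_href, text))
            self._current_href = None

    def handle_data(self, data):
        if self._current_href is not None:
            self._text.append(data)


html = """
<ul class="results">
  <li><a href="/jobs/101">Data Engineer</a> - Berlin</li>
  <li><a href="https://example.org/jobs/102">Web Developer</a></li>
  <li>No link here</li>
</ul>
<p><a href="/about">About us</a></p>
"""
parser = ListLinkExtractor("https://example.com/search")
parser.feed(html)
for url, text in parser.links:
    print(url, "|", text)
```

The `/about` link sits outside any list item, so it is skipped; only the two job links are stored.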

Ethical and Legal Considerations

Ethical list crawling involves respecting website terms of service and adhering to data privacy regulations. Many websites explicitly restrict or prohibit scraping, through `Disallow` rules in their robots.txt files or clauses in their terms of use. Ignoring these restrictions can lead to legal repercussions.

Websites with strict anti-scraping policies are numerous, and enforcement varies: some rely on terms-of-service language alone, while others employ sophisticated techniques, such as rate limiting or CAPTCHAs, to detect and block scraping attempts.

Responsible list crawling requires respecting robots.txt directives, implementing politeness mechanisms (e.g., delays between requests), and avoiding overloading the target website’s servers. Protecting user privacy by anonymizing collected data is also crucial.
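Python's standard library can handle the robots.txt check directly through `urllib.robotparser`. The sketch below is a minimal illustration, assuming a hypothetical crawler name and a robots.txt parsed from a string (a live crawler would call `set_url()` and `read()` instead). It skips disallowed URLs and pauses between the remaining ones, using the site's `Crawl-delay` when one is given. The `sleep` parameter is injectable only so the pacing can be checked without actually waiting.

```python
import time
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for illustration.
ROBOTS_TXT = """
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

USER_AGENT = "ExampleListCrawler/0.1"   # hypothetical crawler name
DEFAULT_DELAY = 1.0                     # fallback pause in seconds


def polite_urls(urls, robots_lines, user_agent=USER_AGENT, sleep=time.sleep):
    """Yield only URLs that robots.txt allows, pausing between them."""
    parser = RobotFileParser()
    parser.parse(robots_lines)
    delay = parser.crawl_delay(user_agent) or DEFAULT_DELAY
    first = True
    for url in urls:
        if not parser.can_fetch(user_agent, url):
            continue            # respect Disallow rules: never request it
        if not first:
            sleep(delay)        # wait between consecutive requests
        first = False
        yield url               # the caller performs the actual request


urls = [
    "https://example.com/list/1",
    "https://example.com/private/admin",
    "https://example.com/list/2",
]
for url in polite_urls(urls, ROBOTS_TXT.splitlines()):
    print("fetching", url)
```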

Applications and Use Cases

List crawling finds applications across various domains. In e-commerce, it’s used for price comparison and competitor analysis. Researchers utilize it for data collection in academic studies. Data analysts employ it for market research and trend identification.

For example, a price comparison website might use list crawling to collect pricing data from various online retailers. This data is then used to provide consumers with a comprehensive overview of available options and their respective prices.
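The aggregation step of such a site might look like the following minimal sketch. The retailer names, products, and prices are made-up sample data standing in for what a list crawler would collect, and `compare_prices` is a hypothetical helper.

```python
from collections import defaultdict
from decimal import Decimal

# Made-up records, shaped like the output of crawling several
# retailers' product lists: (retailer, product, price).
records = [
    ("ShopA", "Wireless Mouse", Decimal("24.99")),
    ("ShopB", "Wireless Mouse", Decimal("22.50")),
    ("ShopC", "Wireless Mouse", Decimal("26.00")),
    ("ShopA", "USB-C Cable", Decimal("9.99")),
    ("ShopB", "USB-C Cable", Decimal("11.49")),
]


def compare_prices(records):
    """Group offers by product, each group sorted from cheapest upward."""
    offers = defaultdict(list)
    for retailer, product, price in records:
        offers[product].append((price, retailer))
    return {product: sorted(group) for product, group in offers.items()}


for product, group in compare_prices(records).items():
    best_price, best_shop = group[0]
    print(f"{product}: cheapest at {best_shop} ({best_price}), {len(group)} offers")
```

Sorting `(price, retailer)` tuples orders by price first and breaks ties alphabetically by retailer, which keeps the output stable between runs.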

Imagine a project aiming to analyze the popularity of specific keywords across various news websites. List crawling could be used to extract the articles containing those keywords from each website’s list of recent articles. The frequency of each keyword across different news sources can then be compared to gauge its relative prominence in coverage and, indirectly, public attention.
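A minimal sketch of the counting step, assuming the headlines have already been crawled from each site's list of recent articles. The sources, headlines, and keyword set are hypothetical sample data, and matching is deliberately naive (lower-cased whole words, no stemming).

```python
import re
from collections import Counter

# Hypothetical headlines, keyed by news source.
headlines = {
    "news-a.example": [
        "Inflation slows as energy prices fall",
        "Central bank holds rates amid inflation worries",
    ],
    "news-b.example": [
        "Energy prices spike after supply cut",
        "Tech stocks rally",
    ],
}
KEYWORDS = {"inflation", "energy", "rates"}   # hypothetical keywords


def keyword_counts(headlines, keywords):
    """Count keyword occurrences per source (case-insensitive, whole words)."""
    counts = {}
    for source, titles in headlines.items():
        words = re.findall(r"[a-z]+", " ".join(titles).lower())
        counts[source] = Counter(w for w in words if w in keywords)
    return counts


for source, counter in keyword_counts(headlines, KEYWORDS).items():
    print(source, dict(counter))
```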

List crawling offers significant benefits in data acquisition, enabling efficient and automated data collection from large datasets. However, limitations exist, including the need for careful consideration of website structures and the potential for data inconsistencies.

Challenges and Limitations

Website structure changes, dynamic content loading via JavaScript, and anti-scraping measures pose significant challenges to list crawlers. Websites frequently update their layouts, which can silently break the selectors that existing scraping scripts rely on. Lists populated by JavaScript after the page loads are absent from the initial HTML response, so the crawler has to render the page before it can extract them.

Overcoming these challenges involves using techniques like proxies to mask the crawler’s IP address, employing headless browsers to render JavaScript, and implementing delays between requests to avoid detection. Regularly monitoring website changes and adapting scraping scripts accordingly is crucial.
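Of these techniques, the delay is the simplest to show without third-party tools. The sketch below uses exponential backoff, one common way to space out retries: when a server answers with a status that signals overload or rate limiting, the crawler waits progressively longer before trying again. `fetch` is a placeholder for whatever HTTP client is actually used, and the retryable status codes, attempt limit, and base delay are assumptions to tune per site.

```python
import random
import time

RETRYABLE = {429, 500, 502, 503, 504}   # rate limited or server trouble


def fetch_with_backoff(fetch, url, max_attempts=4, base_delay=1.0,
                       sleep=time.sleep, rng=random.random):
    """Call fetch(url) -> (status, body), retrying retryable statuses.

    Waits base_delay * 2**attempt seconds, plus random jitter of up to
    100%, between attempts; returns the last response if all attempts fail.
    """
    for attempt in range(max_attempts):
        status, body = fetch(url)
        if status not in RETRYABLE:
            return status, body
        if attempt < max_attempts - 1:
            sleep(base_delay * (2 ** attempt) * (1 + rng()))
    return status, body


# Usage with a fake fetcher that is rate limited twice, then succeeds.
responses = iter([(429, ""), (503, ""), (200, "<ul><li>ok</li></ul>")])
print(fetch_with_backoff(lambda url: next(responses), "https://example.com/list",
                         sleep=lambda s: None))
```

The jitter keeps many crawler workers from retrying in lockstep; passing `rng=lambda: 0.0` makes the schedule deterministic (1 s, 2 s, 4 s, ...).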

| Challenge | Mitigation Strategy |
|---|---|
| Website Structure Changes | Regularly monitor website updates and adapt scraping scripts accordingly. |
| Dynamic Content Loading | Use headless browsers or JavaScript rendering libraries. |
| Anti-Scraping Measures | Use proxies, implement delays, and rotate user agents. |
| Data Inconsistencies | Implement data validation and cleaning processes. |
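For the last row, a minimal cleaning pass might normalize whitespace, parse prices, reject records that fail basic checks, and drop duplicates. The field names `name` and `price` are hypothetical, and the price parsing assumes US-style notation (`$1,299.00`); a European format such as `1.299,00` would be misread.

```python
import re
from decimal import Decimal, InvalidOperation


def clean_records(raw_records):
    """Normalize scraped product records; drop invalid and duplicate ones.

    Each raw record is assumed to be a dict with hypothetical "name" and
    "price" fields holding strings as scraped from the page.
    """
    cleaned, seen = [], set()
    for record in raw_records:
        name = " ".join(str(record.get("name", "")).split())
        # Keep only digits and the decimal point ("$1,299.00" -> "1299.00").
        price_text = re.sub(r"[^\d.]", "", str(record.get("price", "")))
        try:
            price = Decimal(price_text)
        except InvalidOperation:
            continue            # empty or unparseable price
        if not name or price <= 0:
            continue            # missing name or implausible price
        key = (name.lower(), price)
        if key in seen:
            continue            # duplicate listing
        seen.add(key)
        cleaned.append({"name": name, "price": price})
    return cleaned


raw = [
    {"name": "  Wireless   Mouse ", "price": "$24.99"},
    {"name": "Wireless Mouse", "price": "24.99"},
    {"name": "4K Monitor", "price": "$1,299.00"},
    {"name": "", "price": "$5.00"},
    {"name": "Cable", "price": "N/A"},
]
for row in clean_records(raw):
    print(row)
```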

Future Trends and Developments

The future of list crawling will likely involve more sophisticated techniques for handling dynamic content, evading anti-scraping measures, and managing large-scale data extraction. Advancements in AI and machine learning will play a key role in automating the process and improving accuracy.

Predicting the future of list crawling requires considering the ongoing arms race between web scrapers and website owners. More sophisticated anti-scraping techniques will likely be developed, prompting further innovation in list crawling methodologies. The ethical considerations will continue to be paramount, demanding responsible development and deployment of list crawling tools.

Ending Remarks

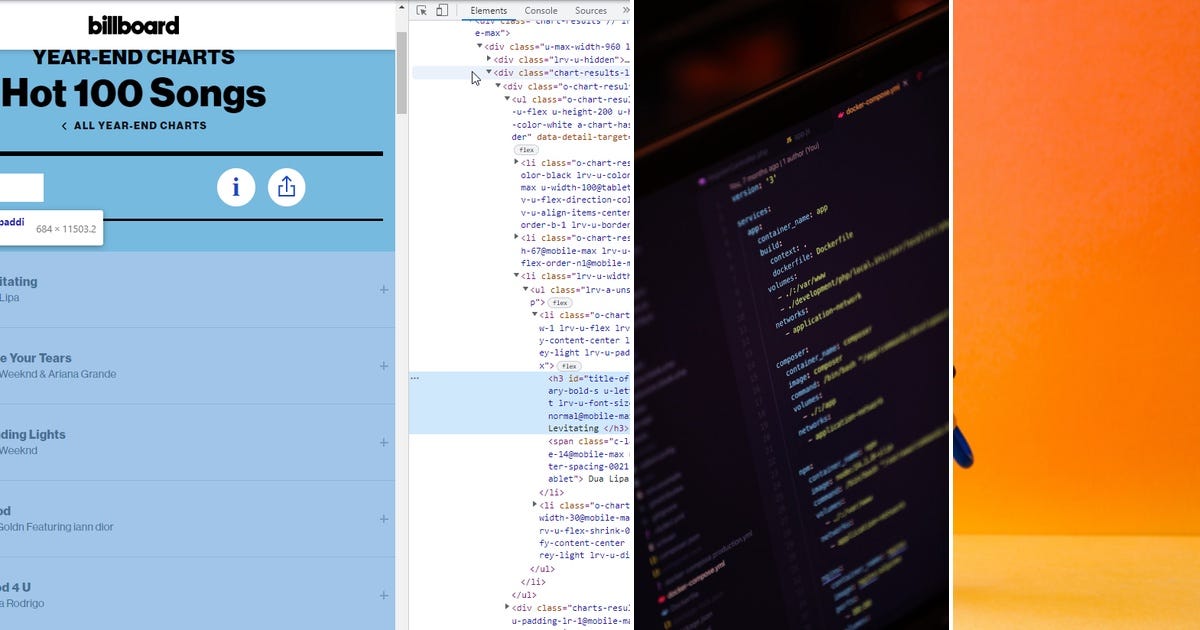

Source: medium.com

Listcrawling presents a powerful tool for data extraction, but responsible use is paramount. By understanding the ethical considerations, mastering the techniques, and anticipating future challenges, practitioners can leverage listcrawling for beneficial applications while respecting website policies and user privacy. The future of listcrawling hinges on adapting to evolving website designs and anti-scraping measures, highlighting the need for continuous innovation and responsible practice within this dynamic field.